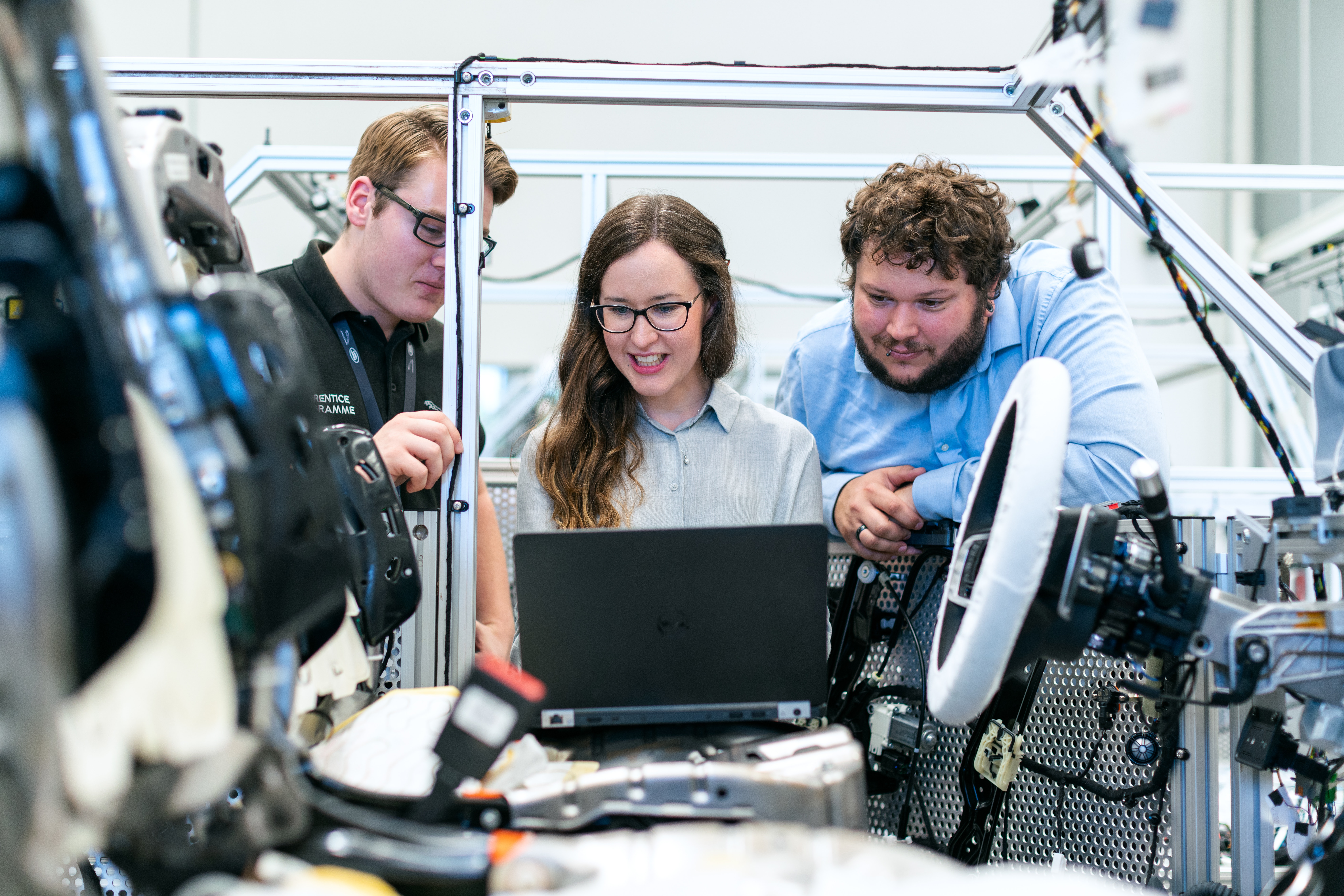

Artificial intelligence is no longer reserved for consumer tech or cloud services. In 2025, AI is being deeply integrated into railway signaling, driver monitoring systems, and even predictive maintenance on embedded platforms.

However, integrating AI into ASPICE-compliant V-model workflows raises a fundamental question:

How do you trace, verify, and validate something that learns and adapts?

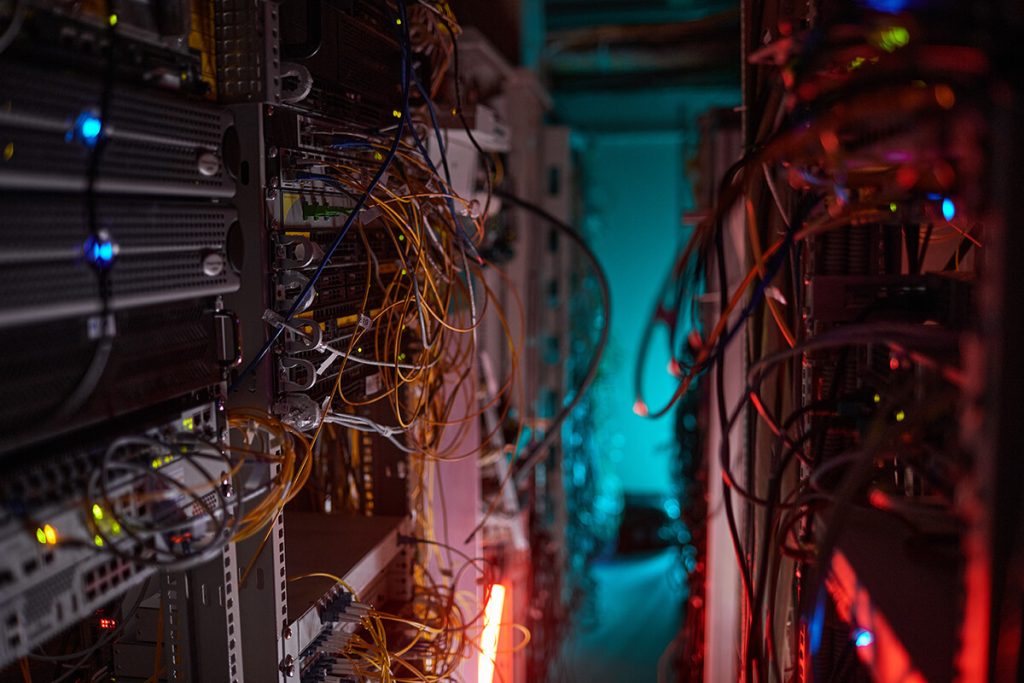

In traditional embedded systems, the V-model provides structure: define requirements, derive the architecture, implement, then verify and validate back up against each level. But AI, especially ML/DL, often behaves as a black box, with data-driven logic that doesn't follow fixed control flows.

Standards like EN 50126 (railway) or ISO 26262 (automotive) demand traceability, testability, and determinism. So how do we manage this when using:

- CNNs in object detection
- LSTM models for predictive diagnosis
- Transformers in natural language interfaces

Do these components even fit into SYS.1/SYS.2/SYS.3?

For sectors like railway and automotive, where safety and regulatory compliance are non-negotiable, this is more than a technical nuance—it’s an existential challenge.

- We cannot just trust the AI.
- We must show why it works, and how we know it won't fail in critical conditions.

To integrate AI without breaking compliance, we must adapt our approach:

- Use explainable AI (XAI) techniques to derive traceable behavior.
- Embed data-centric validation processes into SYS.2.
- Treat trained models as software units with formal specifications (as per emerging ISO/PAS 8800 practices).
- Add data quality requirements into SYS.1.
- Model the training pipelines and trace them the way we trace software builds.

And most importantly: align AI development with ASPICE base practices, especially those of SYS.2 (System Requirements Analysis) and SYS.3 (System Architectural Design).
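
Tracing a training pipeline like a software build can start with a manifest that fingerprints every input and output of a training run. The following is a minimal sketch, not a prescribed ASPICE work product: the `TrainingRecord` fields, the `build_record` helper, and the requirement ID `SYS-REQ-012` are hypothetical names chosen for illustration.

```python
import hashlib
import json
from dataclasses import dataclass, asdict


def sha256_of(data: bytes) -> str:
    """Return the SHA-256 hex digest of a byte string."""
    return hashlib.sha256(data).hexdigest()


@dataclass(frozen=True)
class TrainingRecord:
    """One traceable training run (field names are illustrative assumptions)."""
    requirement_ids: tuple  # system requirements the model is meant to implement
    dataset_sha256: str     # fingerprint of the exact training data
    config_sha256: str      # fingerprint of hyperparameters and random seed
    model_sha256: str       # fingerprint of the trained weights


def build_record(requirement_ids, dataset: bytes, config: dict, model: bytes) -> TrainingRecord:
    # Serialize the config with sorted keys so the hash does not depend on key order.
    config_bytes = json.dumps(config, sort_keys=True).encode("utf-8")
    return TrainingRecord(
        requirement_ids=tuple(requirement_ids),
        dataset_sha256=sha256_of(dataset),
        config_sha256=sha256_of(config_bytes),
        model_sha256=sha256_of(model),
    )


def to_manifest(record: TrainingRecord) -> str:
    """Render the record as JSON so it can be archived next to the model artifact."""
    return json.dumps(asdict(record), indent=2, sort_keys=True)


if __name__ == "__main__":
    record = build_record(
        ["SYS-REQ-012"],             # hypothetical requirement ID
        b"raw training data bytes",  # in practice: the dataset file contents
        {"lr": 0.01, "seed": 7},     # hypothetical hyperparameters
        b"serialized weights",       # in practice: the exported model file
    )
    print(to_manifest(record))
```

If any of the three fingerprints changes, the model is a different artifact and its verification evidence has to be re-established, which is the same rule a team would apply to a rebuilt binary.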

Those who do it right gain:

- Early identification of hidden risks in AI components
- Better confidence during safety assessments
- Higher customer trust (Tier 1/OEM relationships)
- Compatibility with EN 50126/EN 50129 or ISO 26262 audits
- Ability to reuse AI components across projects with formal documentation

Challenge 1: “My neural net can’t be specified in SysML!”

Solution: Define the function and its performance envelope as system requirements, then trace those requirements to the trained model as the implementation artifact.
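
A performance envelope becomes checkable once each bound is written as a requirement with an ID, a metric, and a threshold. The sketch below assumes a binary detector and uses hypothetical requirement IDs and thresholds; a real project would take the metric definitions and limits from its own requirements specification.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class PerformanceRequirement:
    """A system requirement expressed as a lower bound on a metric (illustrative)."""
    req_id: str
    metric: str
    minimum: float


def recall(y_true, y_pred) -> float:
    """Fraction of actual positives that the model detected."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    return tp / (tp + fn) if (tp + fn) else 0.0


def precision(y_true, y_pred) -> float:
    """Fraction of predicted positives that were correct."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fp) if (tp + fp) else 0.0


METRICS = {"recall": recall, "precision": precision}


def verify(requirements, y_true, y_pred):
    """Evaluate every requirement and return one traceable result per requirement ID."""
    results = []
    for req in requirements:
        measured = METRICS[req.metric](y_true, y_pred)
        results.append({
            "req_id": req.req_id,
            "metric": req.metric,
            "measured": measured,
            "minimum": req.minimum,
            "passed": measured >= req.minimum,
        })
    return results


if __name__ == "__main__":
    # Hypothetical requirements and a tiny labelled test set.
    reqs = [
        PerformanceRequirement("SYS-REQ-101", "recall", 0.70),
        PerformanceRequirement("SYS-REQ-102", "precision", 0.90),
    ]
    y_true = [1, 1, 1, 1, 0, 0, 0, 0]
    y_pred = [1, 1, 1, 0, 0, 0, 0, 1]
    for row in verify(reqs, y_true, y_pred):
        print(row)
```

The network itself stays a black box here; what is specified and traced is its observable behavior against the envelope.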

Challenge 2: “The data distribution is always changing!”

Solution: Introduce data shift monitoring and define the acceptable drift limits as requirements in SYS.1. Those limits become your validation criterion.
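
One common way to put a number on data shift is the Population Stability Index (PSI), which compares the binned distribution of a feature in live data against the training reference. This is a minimal sketch of that one technique, not the only option: the bin edges and the limit of 0.2 are assumptions for illustration, and the real limit would come from the project's own requirement.

```python
import math

PSI_LIMIT = 0.2  # assumed limit for illustration; take the real value from the requirement


def bin_fractions(values, edges):
    """Return the fraction of values that fall into each bin defined by `edges`."""
    counts = [0] * (len(edges) - 1)
    for v in values:
        # Clamp out-of-range values so they land in the first or last bin.
        v = min(max(v, edges[0]), edges[-1])
        idx = len(counts) - 1
        for i in range(len(counts)):
            if v < edges[i + 1]:
                idx = i
                break
        counts[idx] += 1
    return [c / len(values) for c in counts]


def psi(reference, live, edges, eps=1e-6):
    """Population Stability Index between a reference sample and a live sample."""
    ref = bin_fractions(reference, edges)
    cur = bin_fractions(live, edges)
    total = 0.0
    for r, c in zip(ref, cur):
        # Floor empty bins at eps so the logarithm stays defined.
        r = max(r, eps)
        c = max(c, eps)
        total += (c - r) * math.log(c / r)
    return total


def drift_exceeded(reference, live, edges, limit=PSI_LIMIT):
    """True when the measured shift violates the specified limit."""
    return psi(reference, live, edges) > limit


if __name__ == "__main__":
    edges = [0.0, 0.25, 0.5, 0.75, 1.0]
    reference = [0.1, 0.2, 0.3, 0.4, 0.6, 0.7, 0.8, 0.9]
    live = [0.8, 0.85, 0.9, 0.95, 0.9, 0.8, 0.7, 0.99]
    print("PSI:", psi(reference, live, edges))
    print("limit exceeded:", drift_exceeded(reference, live, edges))
```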

Challenge 3: “AI testing doesn’t fit into our current toolchain!”

Solution: Use AI-specific test methods (e.g., robustness testing, adversarial inputs) and integrate the results into the standard V&V reports that ASPICE already expects.
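
A robustness test can produce the same kind of pass/fail record as any other verification case. The sketch below uses a toy stand-in model and simple bounded perturbations (uniform shifts plus seeded random noise). It is not a gradient-based adversarial attack, and `toy_model`, the test and requirement IDs, and the thresholds are all hypothetical.

```python
import random


def toy_model(x):
    """Stand-in classifier: returns 1 if the mean feature value exceeds 0.5."""
    return 1 if sum(x) / len(x) > 0.5 else 0


def perturbations(x, epsilon, trials, rng):
    """Yield perturbed copies of x with every feature moved by at most epsilon."""
    yield [v + epsilon for v in x]  # uniform upward shift
    yield [v - epsilon for v in x]  # uniform downward shift
    for _ in range(trials):
        yield [v + rng.uniform(-epsilon, epsilon) for v in x]


def robustness_rate(model, inputs, epsilon, trials=20, seed=0):
    """Fraction of inputs whose prediction survives every tested perturbation."""
    rng = random.Random(seed)  # fixed seed keeps the test reproducible
    stable = 0
    for x in inputs:
        baseline = model(x)
        if all(model(p) == baseline for p in perturbations(x, epsilon, trials, rng)):
            stable += 1
    return stable / len(inputs)


def vv_entry(test_id, req_id, measured, minimum):
    """Format the result as a row for a V&V report (layout is an assumption)."""
    return {
        "test_id": test_id,
        "req_id": req_id,
        "measured": measured,
        "minimum": minimum,
        "verdict": "pass" if measured >= minimum else "fail",
    }


if __name__ == "__main__":
    inputs = [[0.9, 0.9], [0.1, 0.1], [0.51, 0.51]]
    rate = robustness_rate(toy_model, inputs, epsilon=0.05)
    print(vv_entry("TC-ROB-001", "SYS-REQ-103", rate, minimum=0.95))
```

The third input sits close to the decision boundary, so a downward shift of 0.05 flips its prediction. Finding inputs like that is the point of the test.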

AI is not incompatible with the V-model. But it requires adaptation, not rejection.

At Kentia, we work with clients across railway, automotive, and defense, helping them define, trace, and validate AI-powered systems without breaking ASPICE or compromising safety.
